Transformer-based language models have significantly impacted artificial intelligence (AI) in recent years. These models are highly proficient in tasks related to natural language processing, such as machine translation, text generation, and sentiment analysis. However, as transformer models continue to become larger and more complex, it has become increasingly evident that making them more efficient and accessible poses a significant challenge.

Mixture-of-Depths (MoD) is a technique introduced in a research paper from Google DeepMind. It presents an approach to these challenges that aims to make transformer architectures more efficient and cost-effective.

What is Mixture-of-Depths (MoD)?

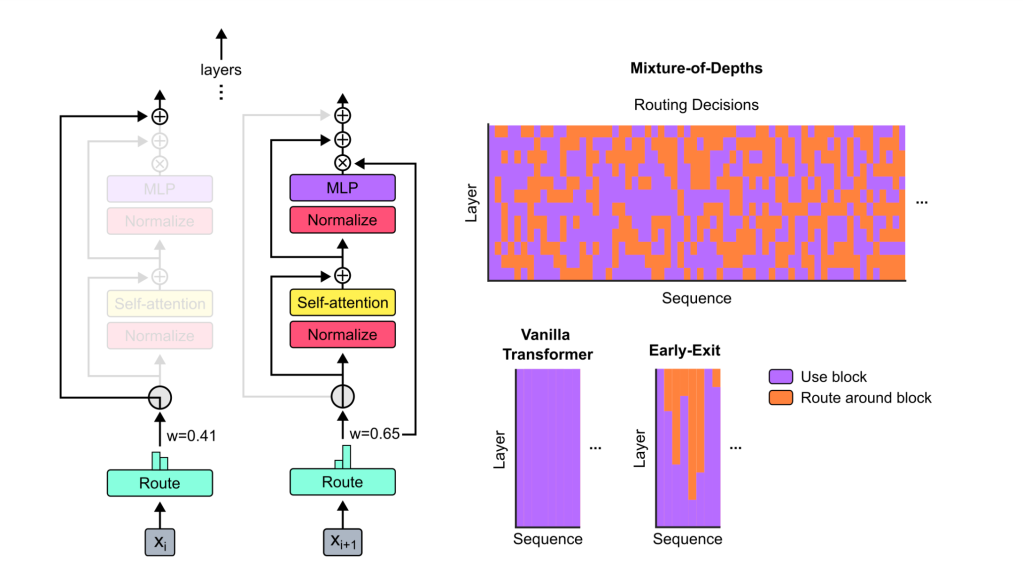

Mixture-of-Depths (MoD) is a new approach to transformer architectures incorporating dynamic token allocation and routing. In traditional transformer models, each token is processed uniformly across the model depth, regardless of its importance or complexity. However, MoD enables selective computation by allocating computational resources based on the significance of each token. This means that tokens of higher importance or complexity receive more processing, while less important ones are routed around certain layers. By dynamically determining which tokens require more processing, MoD aims to improve the efficiency and reduce the computational cost of transformer models without compromising their performance. This innovative technique shows great promise in advancing the capabilities of transformers and enabling them to handle more complex tasks.

How Mixture-of-Depths Works

Here’s a brief explanation of how Mixture-of-Depths (MoD) works:

- Static Compute Budget: MoD sets a static compute budget by limiting the number of tokens that can participate in a block’s computations (self-attention and MLP) at each layer.

- Per-Block Routers: At each layer, MoD uses a component called a “router” to assign a numerical score to each token. This score represents how important or relevant the token is for the current computation. Tokens with higher scores are considered more important.

- Top-k Token Selection: Based on the scores assigned by the router, MoD selects the most important tokens (called the “top-k” tokens) to be processed at each layer. The number of tokens selected is determined by the compute budget set by the user.

- Routing Schemes: During training, the model learns to assign router weights that prioritize the most important tokens for each input sequence (expert-choice routing). By learning to route tokens efficiently, MoD can achieve better performance while using fewer computations than traditional transformer models.

- Mixture-of-Depths-and-Experts (MoDE): MoD can be combined with another technique called Mixture-of-Experts (MoE), which involves using multiple specialized subnetworks (called “experts”) within each layer. By integrating MoD with MoE, the resulting model can benefit from both dynamic token selection and expert specialization, leading to even better performance and efficiency.

Key Components of Mixture-of-Depths Transformers

Now that we have seen how MoD works, let’s take a deep dive into its key components.

1. Static Compute Budget

At the core of MoD lies the concept of a static compute budget, which determines the maximum number of tokens that can participate in the computations at each transformer block. By setting this budget to a value lower than the total number of tokens, MoD effectively reduces the computational cost while still allowing for dynamic token allocation.

To understand this compute budget, let’s quickly examine the notion of capacity in the transformer architecture. (Capacity here does not mean model capacity in the usual sense, that is, how large the model is relative to the dataset.)

The capacity of a transformer refers to the total number of tokens that make up the input to a given computation. This token capacity determines the total number of floating-point operations (FLOPs) for transformers that use conditional computation.

The researchers argue that reducing the capacity of computations lowers the compute required relative to a traditional transformer. However, shrinking the compute budget indiscriminately can degrade performance. They hypothesize that some tokens do not require as much processing as others, and that these tokens can be identified through learning. Therefore, if the network learns to select the right tokens to fill its capacity, it can preserve its performance even with less compute.
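To make the link between capacity and compute concrete, here is a rough back-of-the-envelope sketch. The FLOP formula is a common approximation for a standard transformer block (two FLOPs per multiply-add, an MLP hidden width of 4x the model width), not a figure taken from the paper, and the function name `block_flops` and the sizes used are assumptions for illustration.

```python
def block_flops(num_tokens: int, d_model: int, mlp_ratio: int = 4) -> int:
    """Rough forward-pass FLOPs for one transformer block over num_tokens tokens."""
    # Q, K, V and output projections: four (d_model x d_model) matmuls per token.
    projections = 4 * 2 * num_tokens * d_model * d_model
    # Attention scores (QK^T) and the weighted sum over values: quadratic in tokens.
    attention = 2 * 2 * num_tokens * num_tokens * d_model
    # Two MLP matmuls with hidden width mlp_ratio * d_model.
    mlp = 2 * 2 * num_tokens * d_model * (mlp_ratio * d_model)
    return projections + attention + mlp


seq_len, d_model = 2048, 1024  # illustrative sizes only
full = block_flops(seq_len, d_model)
for fraction in (1.0, 0.5, 0.125):
    capacity = int(seq_len * fraction)
    ratio = block_flops(capacity, d_model) / full
    print(f"capacity={capacity:5d} tokens -> {ratio:.1%} of full-block FLOPs")
```

Because the attention term is quadratic in the number of participating tokens, halving the capacity cuts the block's cost by somewhat more than half under this approximation.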

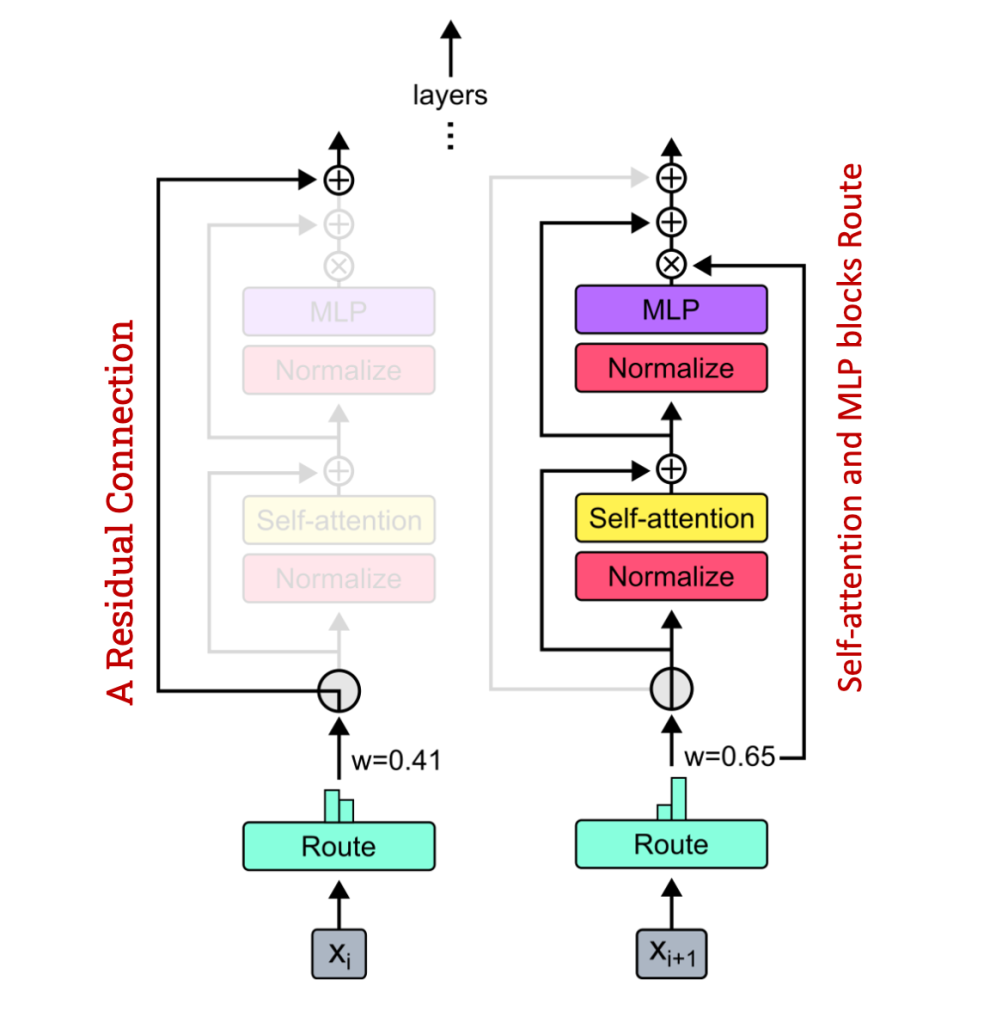

2. Per-Block Routers (Routing around transformer blocks)

The per-block routers are another crucial component of the MoD architecture. These routers assign a weight to each token based on its importance and relevance to the current computation. The router weights are generated through a linear projection of the token embeddings, capturing the contextual information necessary for informed routing decisions.

A token in an MoD transformer can take one of two computational paths:

- Self-attention and MLP (multi-layer perceptron) blocks (computationally expensive)

- A residual connection (computationally cheap)

In an MoD transformer, each block has its own router that assigns a scalar weight to each token in the input sequence. These router weights represent the router’s preference for each token to either undergo the block’s computations or skip them.

In other words, these weights determine the importance of each token and guide the routing decisions throughout the model depth.
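The linear projection described above can be sketched in a few lines. This is a minimal illustration in plain Python, assuming each token is a list of floats; the names `router_weights` and `w_router` are hypothetical, and in a real model the projection vector would be a learned parameter rather than random values.

```python
import random


def router_weights(tokens, w_router):
    """Scalar router weight per token: dot product of the embedding with w_router."""
    return [sum(x * w for x, w in zip(token, w_router)) for token in tokens]


random.seed(0)
d_model, seq_len = 4, 6
# Toy token embeddings and a toy projection vector (learned in practice).
tokens = [[random.gauss(0, 1) for _ in range(d_model)] for _ in range(seq_len)]
w_router = [random.gauss(0, 1) for _ in range(d_model)]

weights = router_weights(tokens, w_router)
for i, r in enumerate(weights):
    print(f"token {i}: router weight {r:+.3f}")
```

Each block would hold its own `w_router`, so the same token can receive a high weight at one layer and a low weight at another.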

3. Efficient Routing Schemes

In the Mixture-of-Depths (MoD) approach, routing schemes determine how tokens are allocated to different computational paths within the transformer.

The paper discusses two main routing schemes:

- Token-choice routing

- Expert-choice routing.

1. Token-choice routing:

In this scheme, each token independently chooses its preferred computational path based on the router weights assigned to it. The router produces a probability distribution over the available paths for each token, and the token is assigned to the path with the highest probability.

Advantages:

- Allows tokens to have more control over their computational path

- This can potentially lead to more specialized processing for each token

Disadvantages:

- This may result in an imbalanced load across different computational paths

- Some paths may receive more tokens than others, leading to inefficient resource utilization

2. Expert-choice routing:

In expert-choice routing, each computational path (or “expert”) selects the top-k tokens based on their router weights. This ensures that each path receives an equal number of tokens (determined by the capacity k).

Advantages:

- Guarantees a balanced distribution of tokens across computational paths

- Optimizes resource utilization by ensuring that each path processes an equal number of tokens

- Allows paths to select the most relevant tokens for their specialized processing

Disadvantages:

- Tokens have less control over their computational path

- Some tokens may be selected by multiple paths, while others may not be selected at all

The paper focuses on expert-choice routing for MoD transformers, which offers improved load balancing and resource utilization. Expert-choice routing is a more suitable approach for the MoD method, where tokens can be routed to either the main computational path (self-attention and MLP) or a residual connection.

By using expert-choice routing, the MoD transformer can ensure that the most important tokens are processed by the main computational path while the less important tokens are routed through the residual connection.

The routing schemes in MoD are learned jointly with the rest of the transformer during training. The router weights, which determine the token allocation, are updated based on the language modeling objective, allowing the model to learn optimal routing strategies for the given task.
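The difference between the two schemes is easiest to see in the two-path MoD setting (block versus residual). The sketch below is an illustration under assumptions: the threshold of 0.0 for token-choice and the function names are hypothetical and not taken from the paper.

```python
def token_choice(weights, threshold=0.0):
    """Each token decides for itself: take the block if its weight exceeds threshold."""
    return [i for i, r in enumerate(weights) if r > threshold]


def expert_choice(weights, k):
    """The block picks its k highest-weight tokens, so its load is always exactly k."""
    ranked = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)
    return sorted(ranked[:k])


weights = [0.9, 0.4, -0.2, 0.7, 0.1, 0.3]
capacity = 2

print(token_choice(weights))             # [0, 1, 3, 4, 5]: load depends on the input
print(expert_choice(weights, capacity))  # [0, 3]: load is fixed at `capacity`
```

With token-choice, five of the six tokens ask for the expensive path here, which would exceed a budget of two; expert-choice keeps the load fixed regardless of the input.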

4. Top-k Token Selection

MoD selects the top-k tokens based on their router weights to maintain static computation graphs and ensure efficient processing. Only these selected tokens participate in the self-attention and MLP computations, while the remaining tokens are routed around the block.

In MoD transformers, each block has a router that assigns a scalar weight to each token, indicating the token’s importance or relevance to the current computation. After obtaining the router weights, the top-k tokens with the highest weights are selected to participate in the block’s computations. The value of k is determined by the user-defined capacity, which sets the maximum number of tokens that a block can process.

The top-k selection process works as follows:

- Router weights: The router assigns a scalar weight to each token based on its relevance to the current computation.

- Sorting: The tokens are sorted in descending order based on their router weights.

- Selection: The top-k tokens with the highest router weights are selected to participate in the block’s computations. The remaining tokens are routed through the residual connection.
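The three steps above can be sketched as a single block update. This is a simplified illustration, not the authors' implementation: `toy_block` stands in for self-attention plus MLP, and scaling the block output by the router weight before adding the residual reflects my reading of the paper rather than anything stated in this article.

```python
def mod_block(tokens, weights, k, block_fn):
    """Apply block_fn to the k highest-weight tokens; pass the rest through unchanged."""
    # Sorting: rank token indices by router weight, highest first.
    ranked = sorted(range(len(tokens)), key=lambda i: weights[i], reverse=True)
    # Selection: keep the top k, restored to their original sequence order.
    selected = sorted(ranked[:k])
    updates = block_fn([tokens[i] for i in selected])
    out = [list(t) for t in tokens]  # residual path: a copy of the input
    for i, update in zip(selected, updates):
        # Assumption: block output is scaled by the router weight, then added.
        out[i] = [x + weights[i] * u for x, u in zip(tokens[i], update)]
    return out, selected


def toy_block(selected_tokens):
    """Hypothetical stand-in for self-attention + MLP: a vector of ones per token."""
    return [[1.0] * len(t) for t in selected_tokens]


tokens = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
weights = [0.5, -1.0, 2.0, 0.25]
out, selected = mod_block(tokens, weights, k=2, block_fn=toy_block)
print(selected)  # [0, 2]
print(out)       # [[0.5, 0.5], [1.0, 1.0], [4.0, 4.0], [3.0, 3.0]]
```

Note that `block_fn` always receives exactly `k` tokens, which is what keeps tensor sizes fixed no matter which tokens are chosen.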

The top-k token selection has several advantages:

- It allows the MoD transformer to focus its computational resources on the most important tokens, leading to more efficient processing.

- By selecting a fixed number of tokens (k) for each block, it ensures a static computation graph and known tensor sizes, which is compatible with current hardware constraints.

- It enables the model to dynamically adapt its computation based on the input sequence, allocating more resources to challenging or informative tokens.

However, the top-k selection process also introduces a challenge during autoregressive sampling. Since the selection depends on the router weights of all tokens in the sequence, it is non-causal: whether a given token is selected depends on future tokens that have not been generated yet. To address this issue, the authors propose a predictor-based routing approach, where an auxiliary predictor module learns to mimic the top-k behavior while relying only on past token information.
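A minimal sketch of the predictor idea follows. It assumes the predictor is a single logistic unit over the token's own features, which is a simplification chosen for brevity; the names `topk_targets`, `predict_selected`, and `w_pred`, and all numeric values, are hypothetical.

```python
import math


def topk_targets(weights, k):
    """Binary labels: 1 if the token was among the top-k router weights, else 0."""
    ranked = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)
    chosen = set(ranked[:k])
    return [1 if i in chosen else 0 for i in range(len(weights))]


def predict_selected(token, w_pred, b_pred=0.0):
    """Causal decision for one token: uses only that token's own features."""
    logit = sum(x * w for x, w in zip(token, w_pred)) + b_pred
    prob = 1.0 / (1.0 + math.exp(-logit))
    return prob > 0.5


# Training time: labels come from the (non-causal) top-k over the full sequence.
weights = [0.9, 0.4, -0.2, 0.7]
targets = topk_targets(weights, k=2)

# Sampling time: each new token is routed without looking at later tokens.
new_token = [0.3, -1.2, 0.8]
w_pred = [1.0, 0.5, 0.25]  # hypothetical learned predictor parameters
print(targets, predict_selected(new_token, w_pred))
```

The predictor would be trained as a binary classifier against `targets`, so that at sampling time its per-token decision can replace the full-sequence top-k.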

Integration with Mixture-of-Experts (MoE) – Mixture-of-Depths-and-Experts (MoDE)

The Mixture-of-Depths (MoD) approach can be seamlessly integrated with Mixture-of-Experts (MoE) architectures, resulting in a combined model called Mixture-of-Depths-and-Experts (MoDE). This integration allows the model to benefit from both dynamic token routing and expert specialization.

Mixture-of-experts (MoE) is a technique in which multiple expert networks are introduced within a layer of a transformer model. Each expert is a separate neural network specializing in processing specific input types. A gating mechanism determines which expert should process each input token, allowing the model to capture complex patterns and learn specialized knowledge more efficiently.

When integrating MoD with MoE, there are two main approaches:

Staged MoDE:

- In this approach, the MoD routing is performed before the MoE routing.

- Tokens are first routed to either participate in the block’s computations or bypass them using the MoD routing mechanism.

- The tokens that participate in the block’s computations are then processed by the MoE layer, where they are routed to different expert networks based on the MoE gating mechanism.

- This approach allows for a more flexible and dynamic allocation of computational resources.

Integrated MoDE:

- In the integrated approach, the MoD and MoE routing are combined into a single routing step.

- The routing mechanism is extended to include an additional “no-op” expert, representing the MoD approach’s residual connection.

- Tokens are routed to either one of the expert networks or the “no-op” expert based on their router weights.

- This approach simplifies the routing process and allows for a more unified treatment of MoD and MoE.
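The two integration styles can be contrasted with a small routing sketch. It is an illustration under assumptions: each token is sent to a single expert by taking the highest score, the function names are hypothetical, and the actual routing in the paper may differ in its details.

```python
def staged_mode_route(mod_weights, expert_scores, k):
    """Staged: MoD top-k decides who enters the block, then MoE picks an expert."""
    ranked = sorted(range(len(mod_weights)), key=lambda i: mod_weights[i], reverse=True)
    selected = set(ranked[:k])
    routes = []
    for i, scores in enumerate(expert_scores):
        if i in selected:
            best = max(range(len(scores)), key=lambda j: scores[j])
            routes.append(f"expert_{best}")
        else:
            routes.append("no-op")  # token bypasses the block via the residual
    return routes


def integrated_mode_route(scores_per_token, num_experts):
    """Integrated: one routing step; the last score column is the no-op expert."""
    routes = []
    for scores in scores_per_token:
        assert len(scores) == num_experts + 1
        best = max(range(len(scores)), key=lambda j: scores[j])
        routes.append("no-op" if best == num_experts else f"expert_{best}")
    return routes


staged = staged_mode_route(
    mod_weights=[0.9, -0.3, 0.4],
    expert_scores=[[0.1, 0.7], [0.6, 0.2], [0.8, 0.1]],
    k=2,
)
integrated = integrated_mode_route(
    [[0.1, 0.7, 0.2], [0.2, 0.1, 0.9], [0.8, 0.1, 0.3]], num_experts=2
)
print(staged)      # ['expert_1', 'no-op', 'expert_0']
print(integrated)  # ['expert_1', 'no-op', 'expert_0']
```

In the staged version the skip decision and the expert decision come from separate routers, while the integrated version folds both into one set of scores.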

The benefits of integrating MoD with MoE include:

- Improved efficiency: By combining dynamic token routing with expert specialization, MoDE models can allocate computational resources more effectively, focusing on the most relevant tokens and expert networks for each input.

- Enhanced performance: The integration of MoD and MoE allows the model to capture complex patterns and learn specialized knowledge more effectively, leading to improved performance on downstream tasks.

- Flexibility: MoDE models offer a flexible framework for combining different routing and expert selection strategies. This enables researchers to explore various configurations and find the optimal balance between efficiency and performance.

The experimental results in the paper demonstrate that combining MoD and MoE models into MoDE models synergistically improves performance.

Why It Is Significant

Numerous experiments have been carried out to assess the effectiveness and efficiency of MoD transformers. The outcomes reveal that MoD consistently surpasses isoFLOP-optimal baselines by achieving lower loss values while necessitating fewer FLOPs per forward pass. This results in significant speed gains at each step, leading to faster training and inference.

For example, an MoD transformer with 220M parameters slightly outperforms the isoFLOP-optimal baseline (also 220M parameters) but is upwards of 60% faster to step during training.

Moreover, MoD transformers exhibit memory savings, particularly in larger models. By dynamically allocating tokens and reducing the computational burden, MoD allows for more efficient utilization of memory resources. This is particularly beneficial in resource-constrained environments or when deploying models on edge devices.

Autoregressive evaluation of MoD transformers has shown promising results, with predictor-based routing enabling efficient and causal inference. The models maintain competitive performance while significantly reducing computational costs.

Mixture-of-Depths-and-Experts (MoDE) further boosts these benefits. MoDE variants, such as staged and integrated MoDE, leverage the strengths of both dynamic token routing and expert specialization, leading to compound improvements in performance and efficiency.

Future Directions and Potential Extensions

The implementation of Mixture-of-Depths has paved the way for more efficient transformer architectures. There are several exciting directions and potential extensions to explore, including:

- Decoupled Routing: Investigating the possibility of separating the routing decisions for queries, keys, and values in self-attention, allowing for more precise control over token participation.

- Long-Term Memory Mechanisms: Using learned token retention to establish long-term memory mechanisms that enable transformers to capture and utilize contextual information over extended sequences.

- Incorporating Diverse Computations: Exploring the integration of specialized computations, such as memory lookup or tool use, within the MoD framework to enhance the model’s capabilities for specific tasks.

- Integration with Other Techniques: Combining MoD with other optimization techniques, such as pruning, quantization, or knowledge distillation, to further improve efficiency and performance.

Conclusion

Mixture-of-Depths (MoD) is a transformer architecture that combines dynamic token routing with a static compute budget to match or improve on baseline performance while reducing computational cost. By lowering the compute needed per forward pass, it can make state-of-the-art language models more accessible. The principles and techniques introduced by MoD also have the potential to inspire further research in other domains of AI.

Key Links

Research Paper: Mixture-of-Depths: Dynamically allocating compute in transformer-based language models

Authors: David Raposo, Sam Ritter, Blake Richards, Timothy Lillicrap, Peter Conway Humphreys, Adam Santoro
